In the last post we looked at how TOP and MAX operators compared. We saw the execution plan for a MAX function used a Stream Aggregate operator which is one of two which we can use for aggregation

I wanted to look at the two operators and how they perform the same tasks in different ways. The way they function is key to understanding why the engine may choose to use one over the other and the impact this can have on the performance of a query.

The two operators in question: the Hash Match (Aggregate) and Stream Aggregate

To demonstrate these operators I’ll use a the query below on the Votes data from StackOverflow and use relevant hints to show specific scenarios:

SELECT VoteTypeId, COUNT(1)

FROM dbo.Votes

GROUP BY VoteTypeId;

Hash match

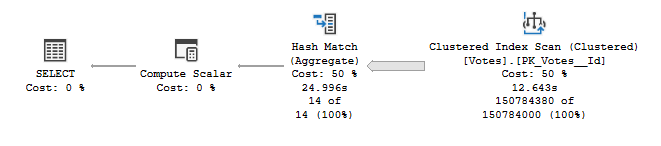

We’ll start with a look at the Hash Match (Aggregate) operator, by hinting to force the operator and use a single core. Let’s check the result:

SELECT VoteTypeId, COUNT(1)

FROM dbo.Votes

GROUP BY VoteTypeId

OPTION (MAXDOP 1, HASH GROUP);

A Hash Match operator will take the input data, hash the key (VoteTypeId in our case), and keep a running total for the value we’re aggregating. It doesn’t need to be concerned with what the key is or how the data is ordered.

This operator has an increased cost as each record hitting it will need to have its key value hashed before it can be matched with existing keys in a hash table. This will make it less efficient from a CPU perspective. In this example it took around 25s to execute.

In terms of memory this operator is quite efficient as it doesn’t need to persist data other than the hash table of running values. With this plan the data will be read, hashed and then can be disregarded. This resulted in a memory grant of only 1mb being needed.

Stream Aggregate

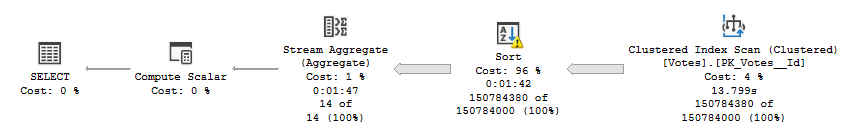

We’ll take a look at the alternative operator, the Stream Aggregate. This will provide the same aggregation we need but in a different way. We’ve again hinted the query to force the required operator as follows:

SELECT VoteTypeId, COUNT(1)

FROM dbo.Votes

GROUP BY VoteTypeId

OPTION (MAXDOP 1, ORDER GROUP);

Immediately we can see a difference in this plan in that we now have a Sort operator added. The reason for this is that a Stream Aggregate will need the input sorted if it’s grouping data. As our data isn’t coming out of the index sorted it’s a necessary evil in the plan.

With the addition of the Sort operator it pushes up the processing for this approach considerably. It’s a lot of up front work to do. In this instance it rose to 104s to execute the query.

Due to the data all being required in memory to have it sorted our memory grant also grew considerably. This plan now requires 1.7gb to execute. Even with that it still ended up spilling another 1gb too.

This isn’t looking so good for the Stream Aggregate Operator.

Au naturel

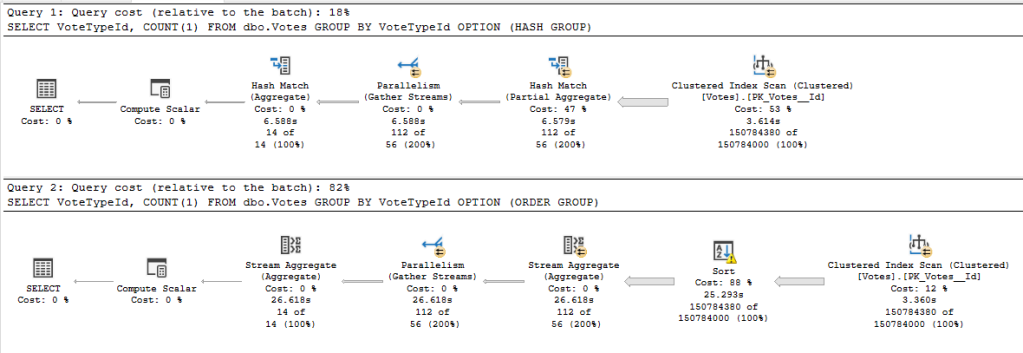

We’re restricting these queries with the hints so let’s try to remove those. We’ll start with the MAXDOP to allow them to go parallel:

The elapsed time for the queries reduces however total CPU time is proportionally higher. Memory grants are similar and the spill is still present from the Sort operator. Parallelism doesn’t really change the results we’re seeing.

Let’s remove the shackles entirely and see how the query runs:

SELECT VoteTypeId, COUNT(1)

FROM dbo.Votes

GROUP BY VoteTypeId;

Without those hints being used we can see the preferred execution plan actually uses both of the operators.

Each of the threads for the query is performing a partial aggregate to reduce the amount of memory required for processing. We have the overhead of the hashing process to build up the data but we can aggregate the source data to a point and reduce the amount which needs to be sorted.

We can then sort a smaller amount of data which will be less impactful to the plan. Once the smaller amount of data is sorted we can use a Stream Aggregate which is more efficient for aggregating than needing to hash the data again if another Hash Match had been used.

Using both operators in conjunction makes a very effective plan overall. On my environment this plan is equivalent or better than the pure Hash Match plan above in terms of CPU, elapsed time, and memory grant.

Ordered Data

We’ve been a bit unfair to the Stream Aggregate operator in this comparison. This operator requires the input to be ordered whereas the Hash Match is more flexible and doesn’t have those limitations.

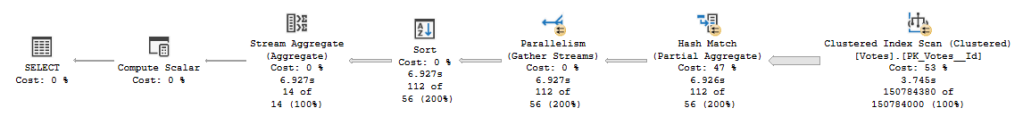

Let’s give the Stream Aggregate some time to shine and add an index to give it a fighting chance:

CREATE INDEX VoteTypeId

ON dbo.Votes (VoteTypeId);

…and now the same query:

SELECT VoteTypeId, COUNT(1)

FROM dbo.Votes

GROUP BY VoteTypeId;

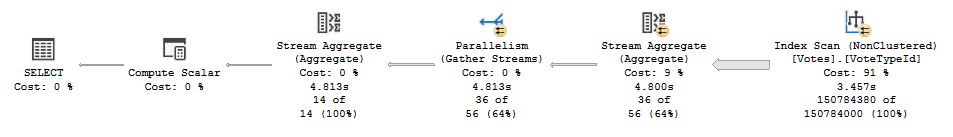

Now that the data is ordered the query plan doesn’t use a Hash Match operator at all. With the index outputting in the required order the Sort operator no longer needs to be included either.

The previous Hash Match operator has been replaced with a Stream Aggregate which is performing partial aggregation across multiple threads. Its function is the same, however it’ll have less overhead due to not needing the data hashed as its being aggregated.

The result of this new plan is a lower execution time, less CPU time, and a much lower memory grant – in addition to less data read as it’s a smaller copy of the data in the index.

Wrap up

In this post we’ve looked at how the Hash Match and Stream Aggregate operators can both handle the same query in different ways.

Hash Match operators can take unordered input, hash the key fields and aggregate the data. This has the advantage of not needing to pre-sort the data, however does have an overhead of needing to hash the keys as it receives data.

The Stream Aggregate operator on the other hand needs data sorted before it can be aggregated which can result in a Sort operator being introduced into the plan. The operator itself is good at aggregating but the introduction of a Sort can massively outweigh its benefits.

We also saw how the engine can provide efficient plans through the use of both operators together, by using the benefits from each to create an optimal solution.

Finally we demonstrated that where we’re able to provide the query with ordered data we’re able to use the more efficient Stream Aggregate operator for even greater performance.

One reply on “Understanding Aggregate Operators”

[…] Andy Brownsword rounds up the usual suspects: […]